- Date: 13.9.2013

- Categories

- Programming languages

- Tools used

Modality-independent Exchange of Information Across Devices (Master's Thesis)

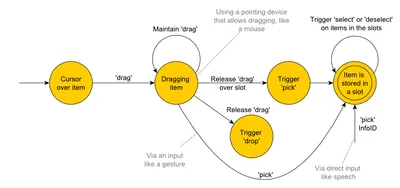

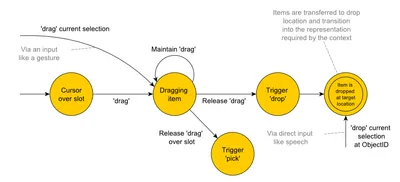

The goal of my master's thesis was to develop and evaluate Metamorph, a multimodal user interaction concept that applies the drag & drop technique across device borders. To make things more interesting, this is no ordinary drag & drop, in which the dragged information must retain its original representation.

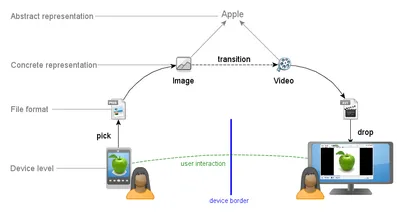

The dragged information can be represented by different media types, depending on the drop context. For example, dragging the text “apple” and dropping it into a droppable area for images makes the information adapt: it switches to an image of an apple and displays that visual representation. One of the main achievements of my master's thesis was to devise such an interaction concept, evaluate it with expert users, and discuss the implications and problems inherent to such an interaction modality.

In other words, the interaction seemingly morphs the information's representation to fit the context of the drop target, using metadata that defines which representation to use in which context. I developed this for an existing prototype system that allows a drag & drop-like interaction across device borders.
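To make the idea more concrete, here is a minimal Python sketch of metadata-driven morphing. It is an illustration only and not code from the prototype: the names `DraggedInfo` and `morph`, the media type labels, and the file names are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class DraggedInfo:
    """A piece of information plus metadata listing its possible representations."""
    concept: str
    # Hypothetical metadata: media type -> concrete representation.
    representations: dict[str, str] = field(default_factory=dict)


def morph(info: DraggedInfo, accepted_media_types: list[str]) -> tuple[str, str]:
    """Return (media_type, representation) for the first type the drop target accepts."""
    for media_type in accepted_media_types:
        if media_type in info.representations:
            return media_type, info.representations[media_type]
    raise LookupError(f"no representation of {info.concept!r} fits this drop target")


# The "apple" example: one concept, several representations (file names are made up).
apple = DraggedInfo(
    concept="apple",
    representations={"text": "apple", "image": "apple.png", "audio": "apple.wav"},
)

print(morph(apple, ["image"]))          # ('image', 'apple.png')
print(morph(apple, ["video", "text"]))  # ('text', 'apple')
```

The drop target only declares which media types it accepts, in order of preference; the dragged information carries everything needed to pick a fitting representation.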

Modality independence

Another goal was the ability to spontaneously switch input modalities and devices on demand. For example, a user entering information at a computer with mouse and keyboard should be able to leave the computer, pick up a tablet, and continue working in the next room, entering the text with the tablet's touch or voice input.

Unfortunately, I cannot share the source code this time: my prototype depends heavily on the existing prototype system and is interwoven with its code, which is the property of the University of Ulm and part of a larger research project. However, since the focus was the interaction concept and its theoretical elaboration, the most interesting parts of this work can be found in the documentation.

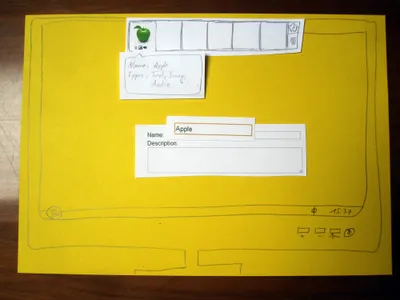

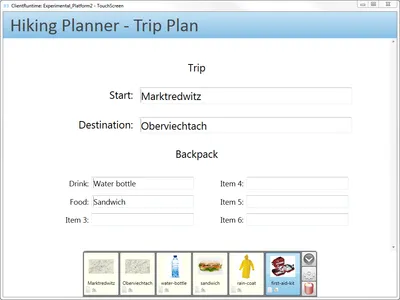

The Prototype System

The existing prototype system offers a model-based approach to user interface generation at runtime. It is a distributed system that uses the SEMAINE API, a message-oriented middleware (MOM), for communication. The system's interaction management (IM) allows flexible and dynamic adaptation of the user interface to the context of use at runtime. The IM also provides automatic speech recognition (ASR), text-to-speech (TTS) output, an XML schema for modeling device capabilities, adapted GUI components based on the .NET Windows Presentation Foundation (WPF), an event-driven system for dialog handling, and logging and monitoring of the input and output messages sent via the MOM.
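The following Python sketch illustrates the general pattern described above: components exchange messages over a middleware, and an interaction manager decides at runtime which output modality a device should use. It is a simplified, in-process stand-in and does not use or mirror the SEMAINE API. The class `MessageBus`, the topic names, and the `DEVICES` capability table are assumptions made for this example; the real system models device capabilities with an XML schema.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class MessageBus:
    """In-process stand-in for a message-oriented middleware (topic-based publish/subscribe)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)
        self.log: list[tuple[str, dict]] = []  # every published message is recorded

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> None:
        self.log.append((topic, message))
        for handler in list(self._subscribers[topic]):
            handler(message)


# Hypothetical device capability model: device name -> supported output modalities.
DEVICES = {
    "desktop": {"gui", "speech_out"},
    "headset": {"speech_out"},
}


def choose_output(device: str, preferred: list[str]) -> str:
    """Pick the first preferred output modality that the device supports."""
    for modality in preferred:
        if modality in DEVICES[device]:
            return modality
    raise LookupError(f"{device} supports none of {preferred}")


bus = MessageBus()
rendered: list[str] = []


def interaction_manager(message: dict) -> None:
    # Decide at runtime how the prompt is presented on the target device.
    modality = choose_output(message["device"], ["gui", "speech_out"])
    bus.publish(f"output.{modality}", {"device": message["device"], "text": message["text"]})


bus.subscribe("dialog.prompt", interaction_manager)
bus.subscribe("output.gui", lambda m: rendered.append(f"GUI on {m['device']}: {m['text']}"))
bus.subscribe("output.speech_out", lambda m: rendered.append(f"TTS on {m['device']}: {m['text']}"))

bus.publish("dialog.prompt", {"device": "desktop", "text": "Drop the item here"})
bus.publish("dialog.prompt", {"device": "headset", "text": "Drop the item here"})

print(rendered)      # one GUI rendering for the desktop, one TTS rendering for the headset
print(len(bus.log))  # 4 messages: two prompts and two output messages
```

Because every message passes through `publish`, logging and monitoring come for free at a single point, which is one reason a message-oriented design suits a distributed, multi-device setup.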

Disclaimer

The presentation is provided only in German; no English translation is available. I assume no responsibility or liability for any errors or omissions in the content of this work. The information contained in this work is provided on an “as is” basis with no guarantees of completeness, accuracy, usefulness or timeliness and without any warranties of any kind whatsoever, express or implied.